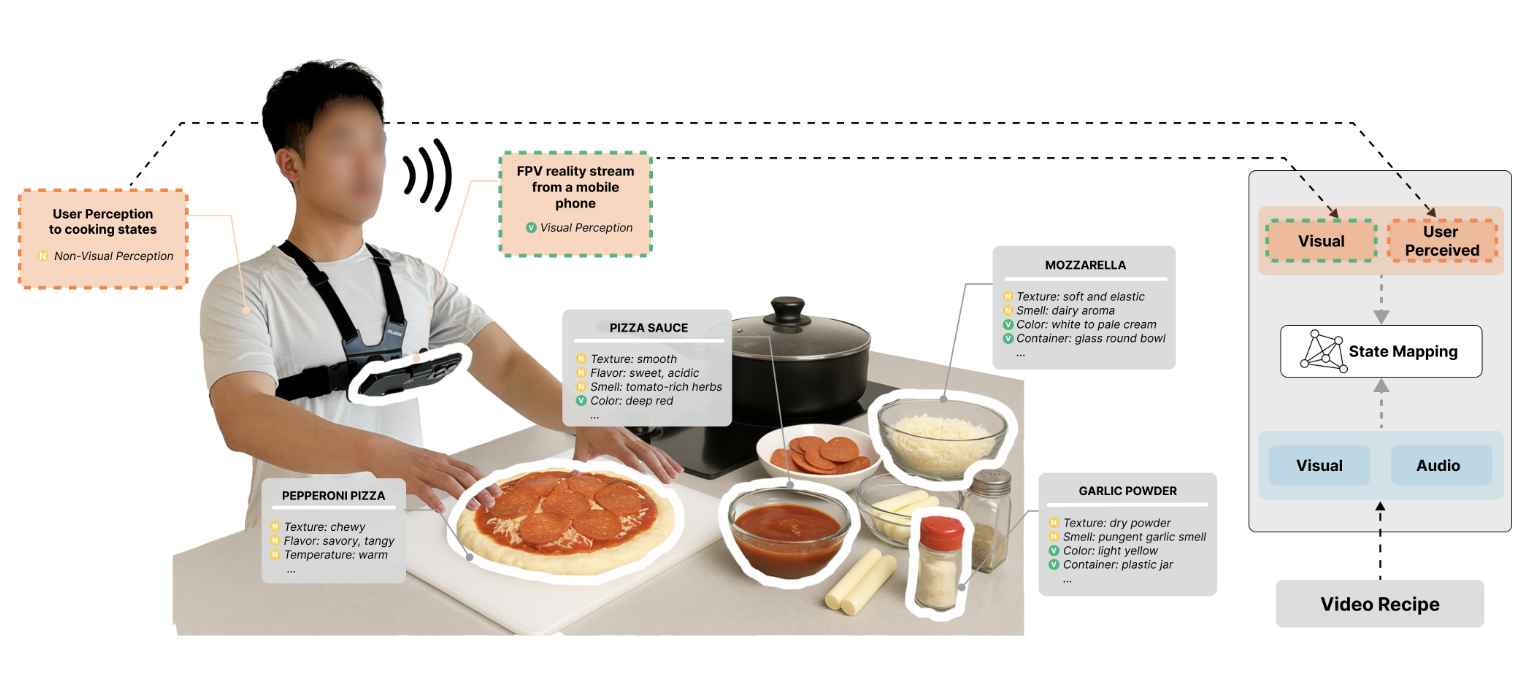

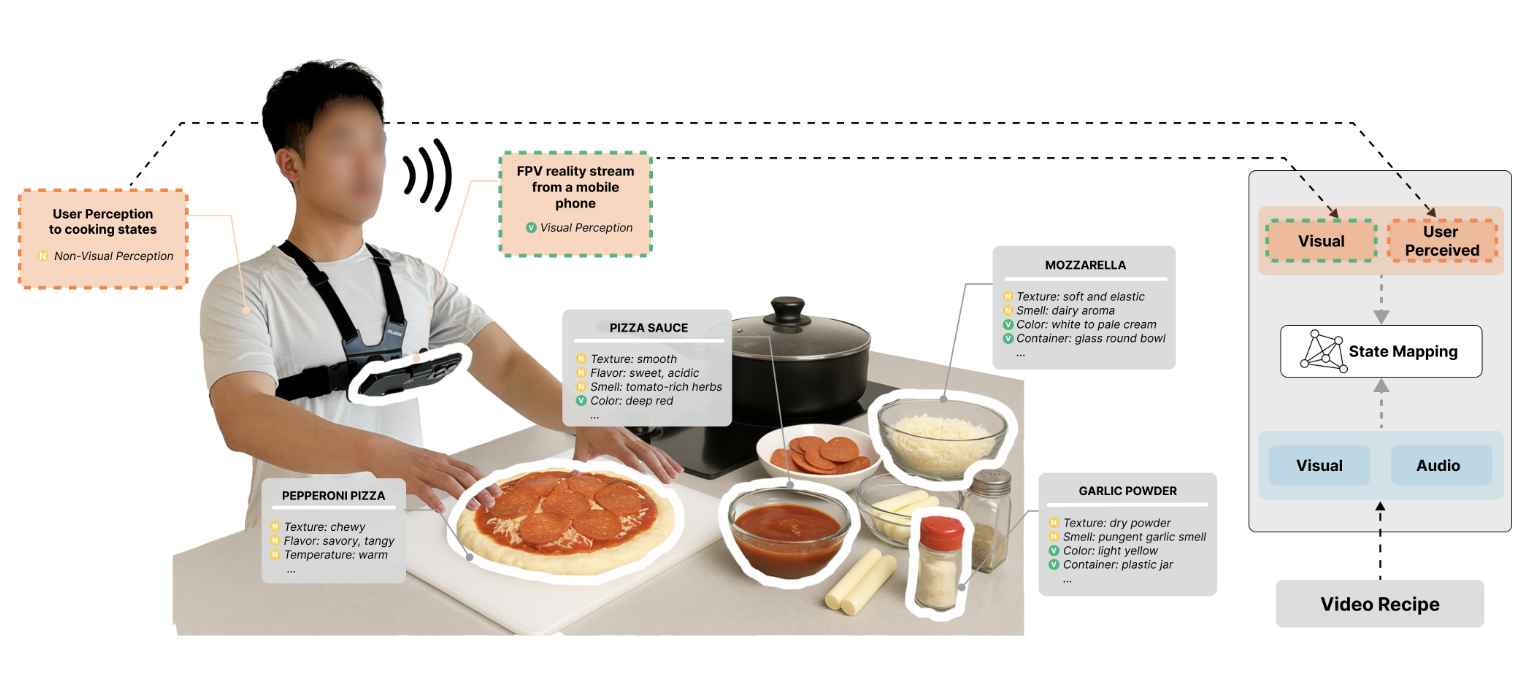

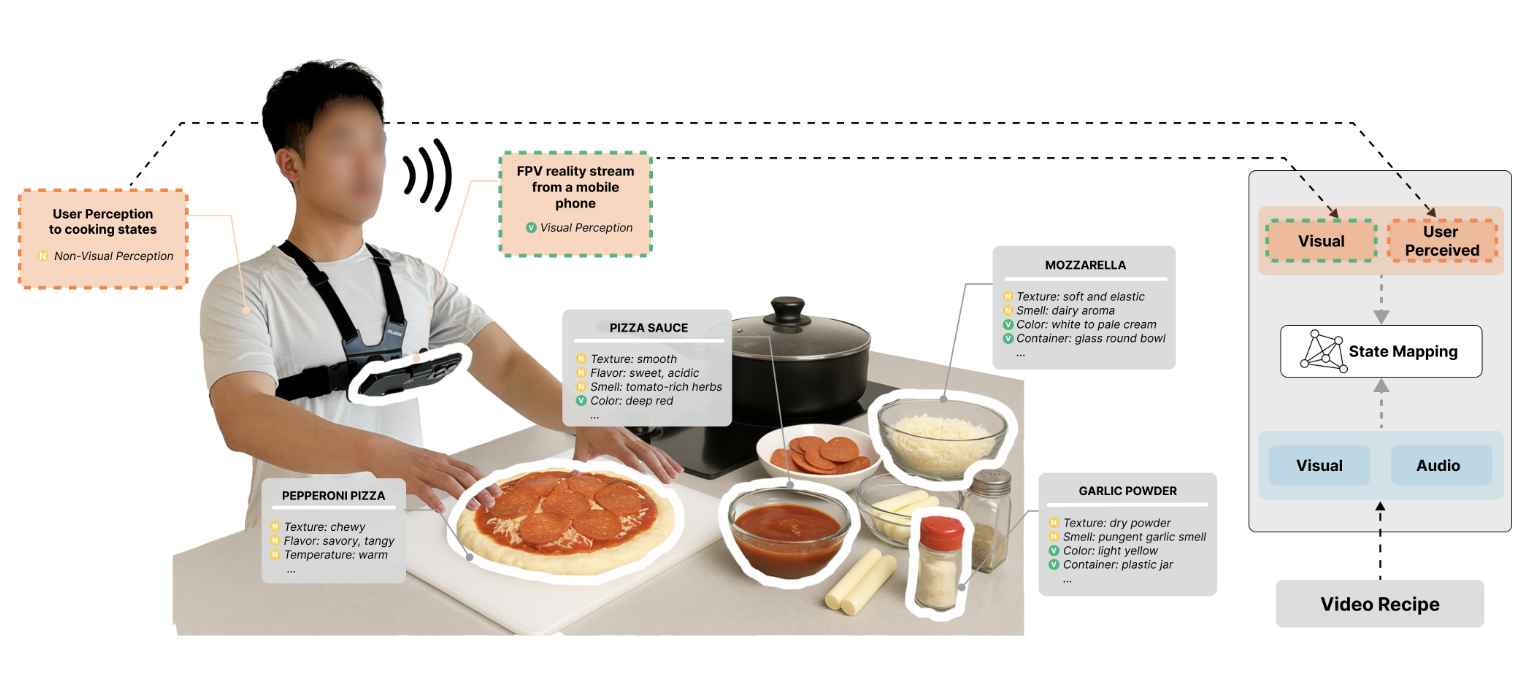

AROMA

Mixed-initiative AI assistance for non-visual cooking from videos.

Read the paper

Personal website of Ning Zheng, Applied Scientist II at Amazon. Includes research focus, projects, news, and publications.


Hi! 👋👋👋 I'm Ning Zheng (宁政), currently an Applied Scientist at Amazon (P13N), building generative recommendation systems and intent-understanding algorithms for the Amazon homepage. I obtained my Ph.D. in Computer Science and Human-Computer Interaction (HCI) at the University of Notre Dame with Prof. Toby Jia-Jun Li.

My Ph.D. research focused on building multimodal human-AI collaboration systems that augment users' cognitive capabilities.

Previously, I worked at Adobe and Microsoft as a research scientist intern.

Selected projects during my Ph.D.

Mixed-initiative AI assistance for non-visual cooking from videos.

Read the paper

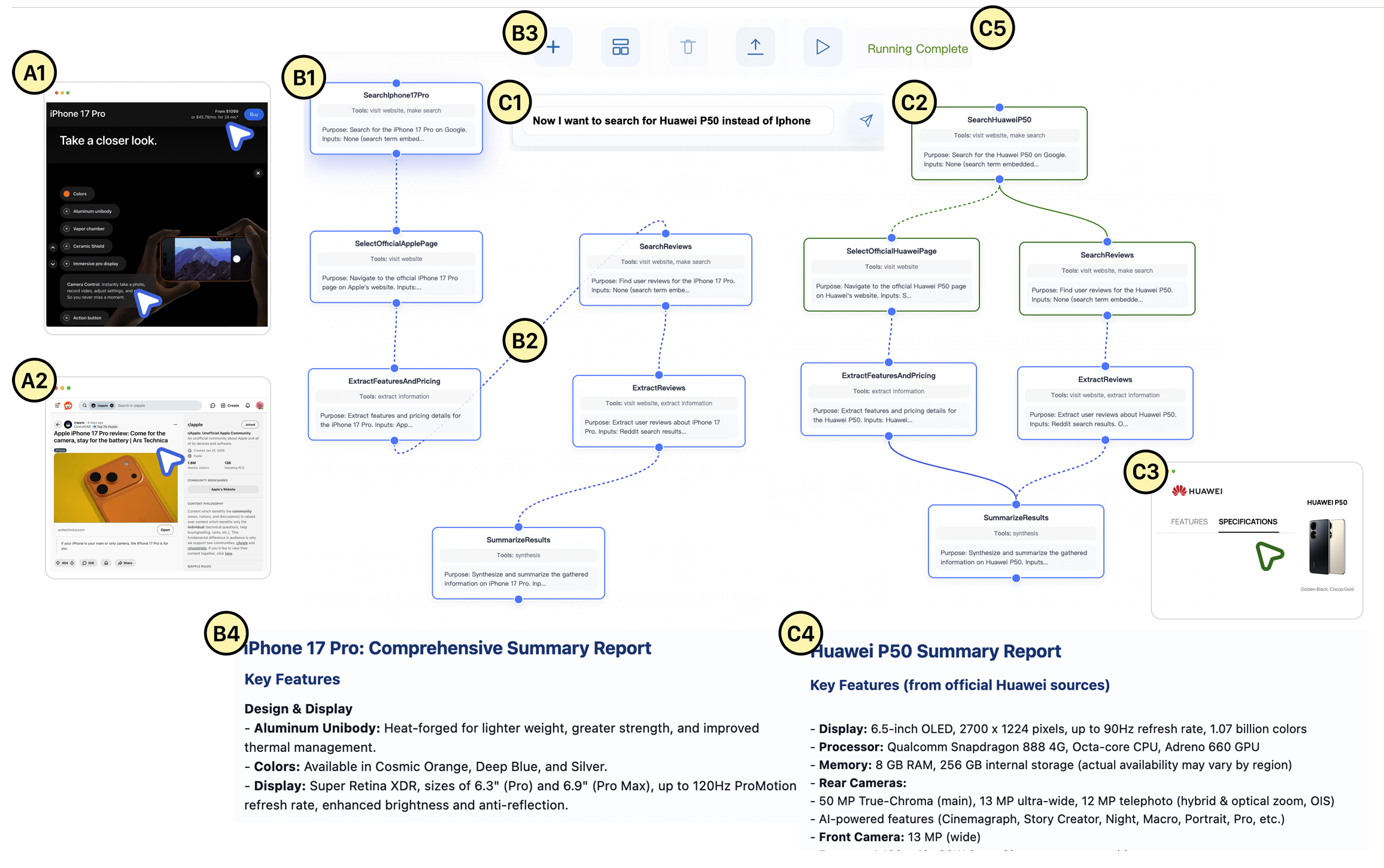

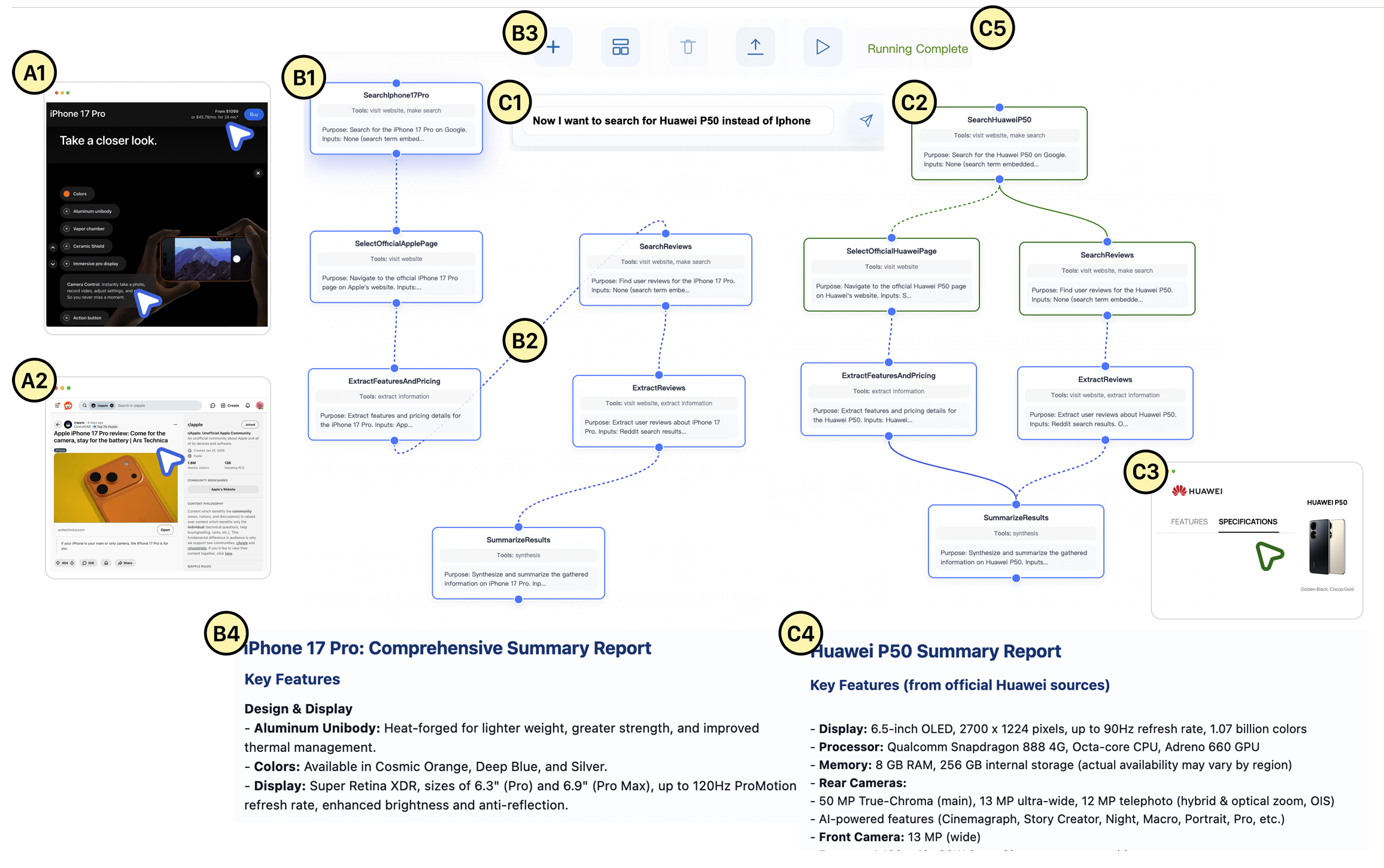

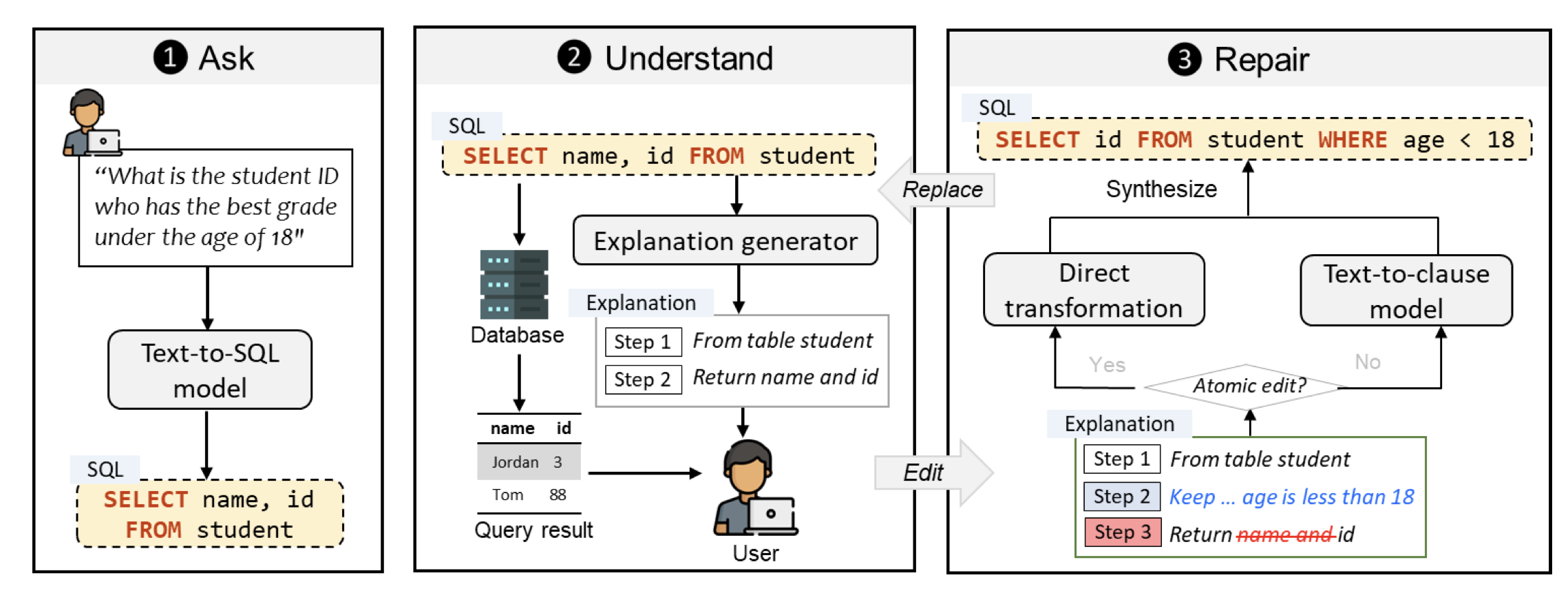

Agentic workflow generation from user demonstrations in web browsers.

Read the paper

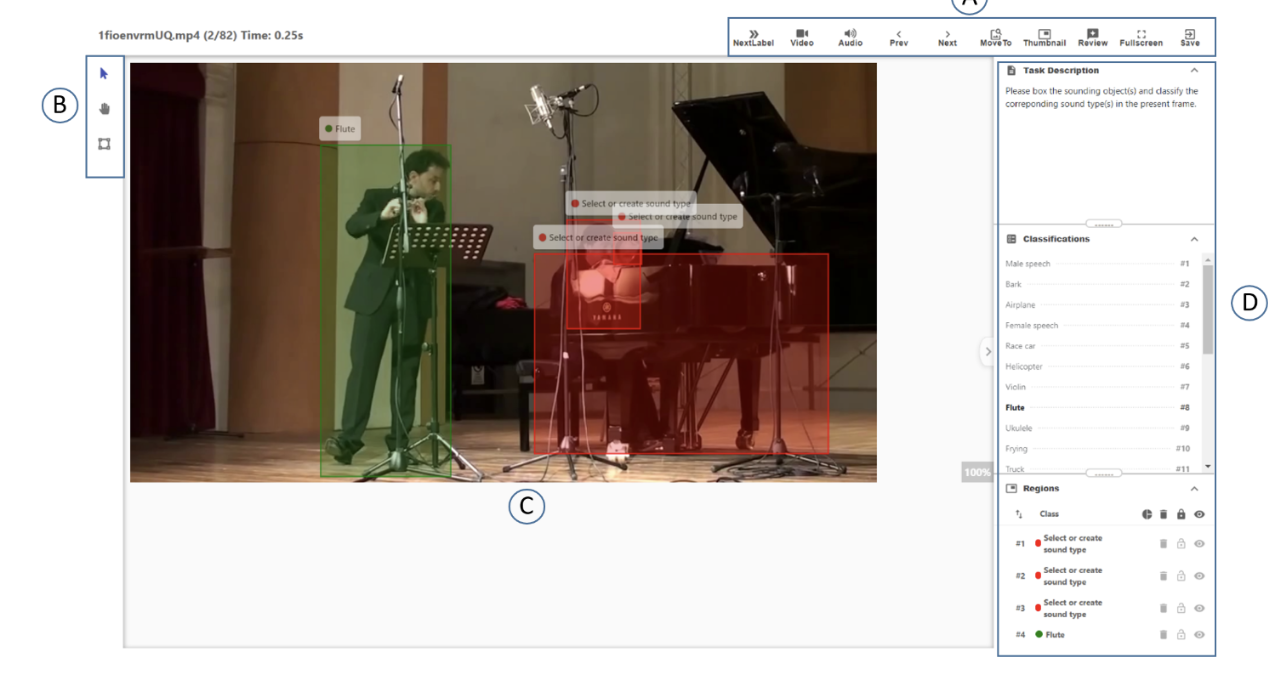

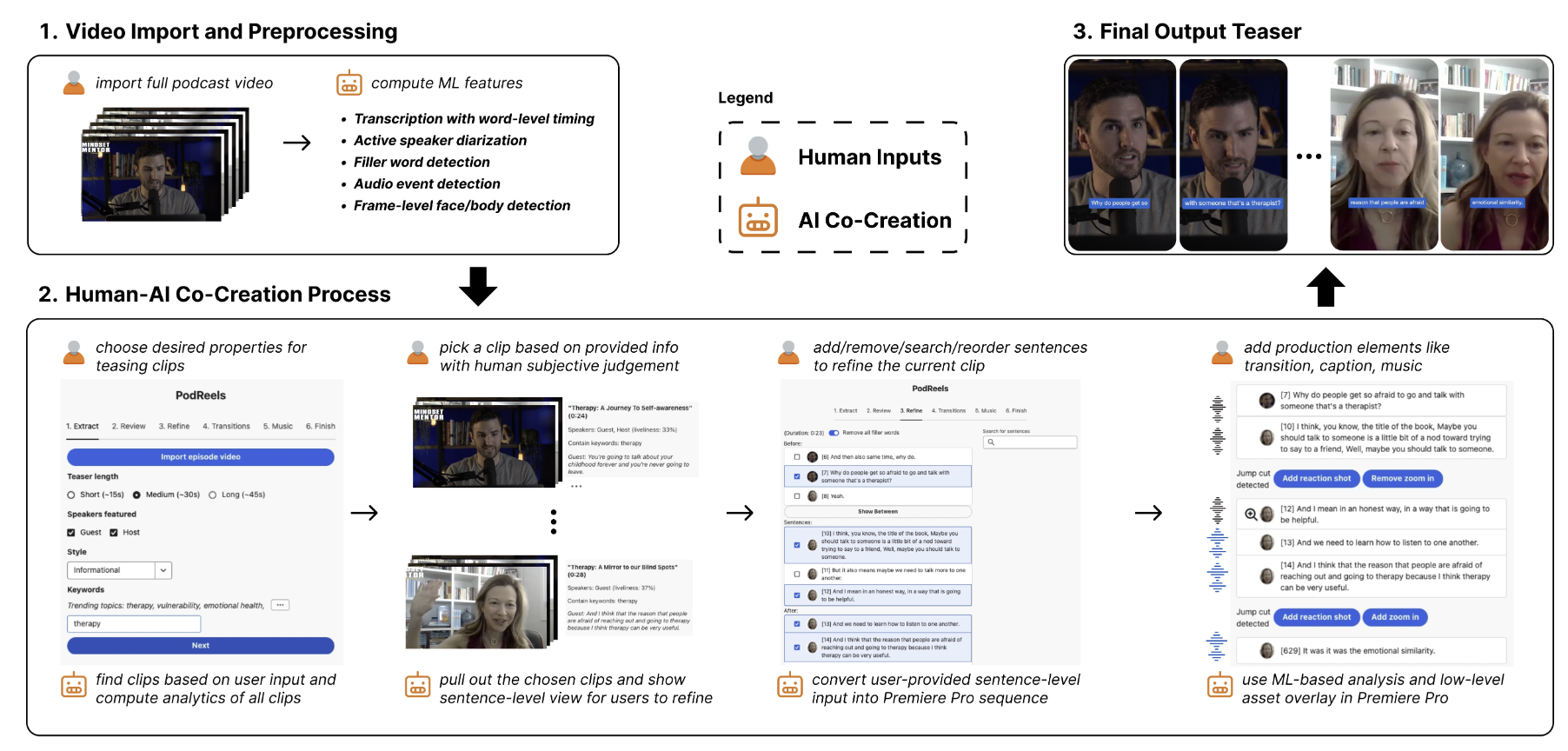

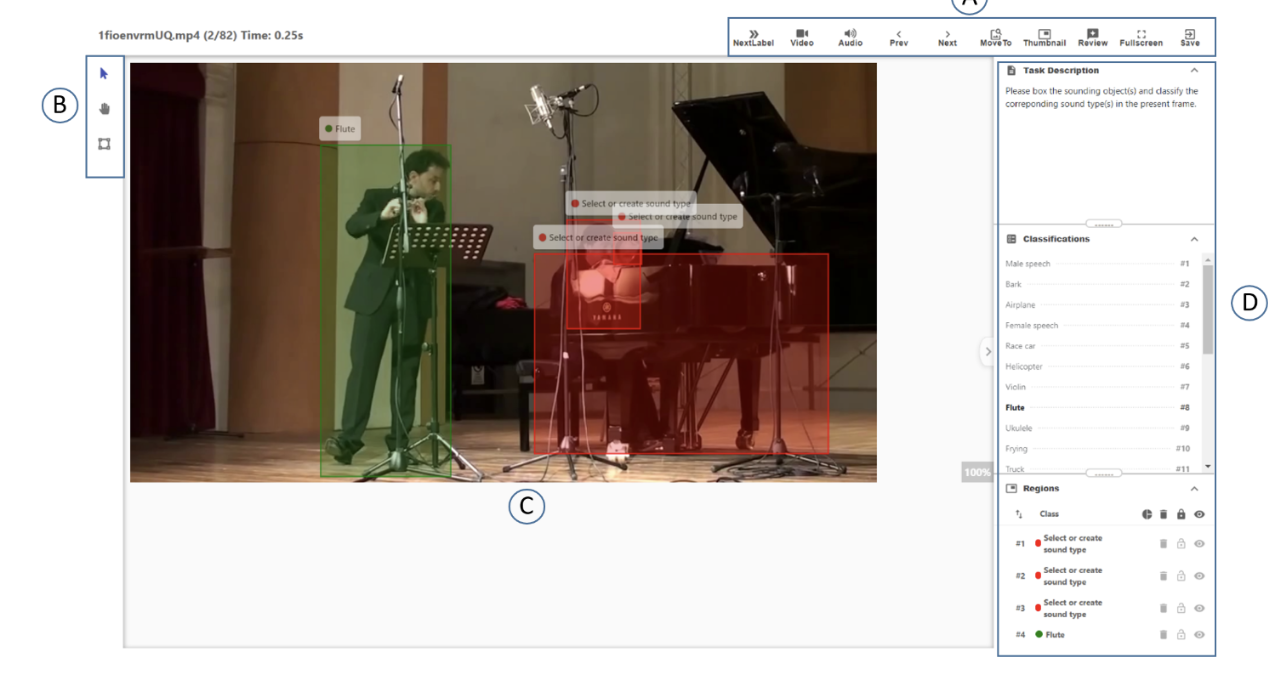

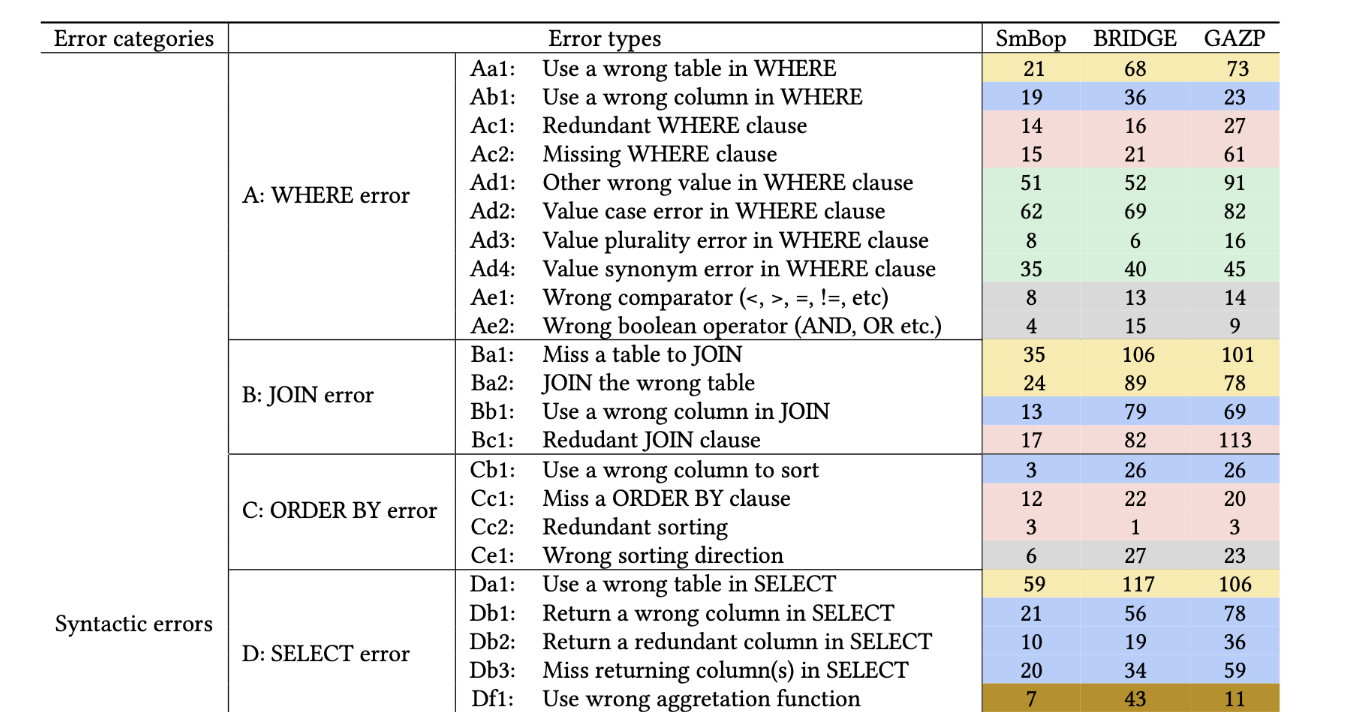

Human-AI collaboration for multimodal video annotation.

Read the paper

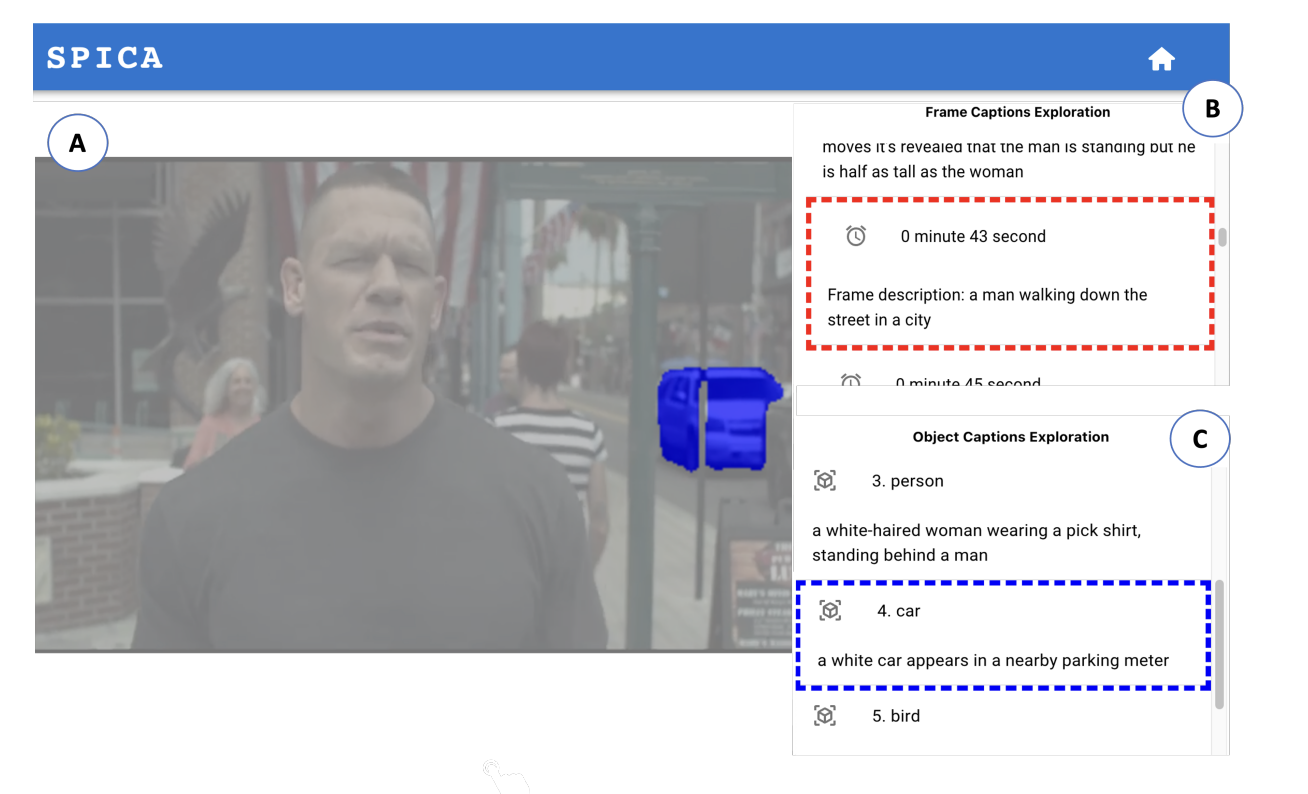

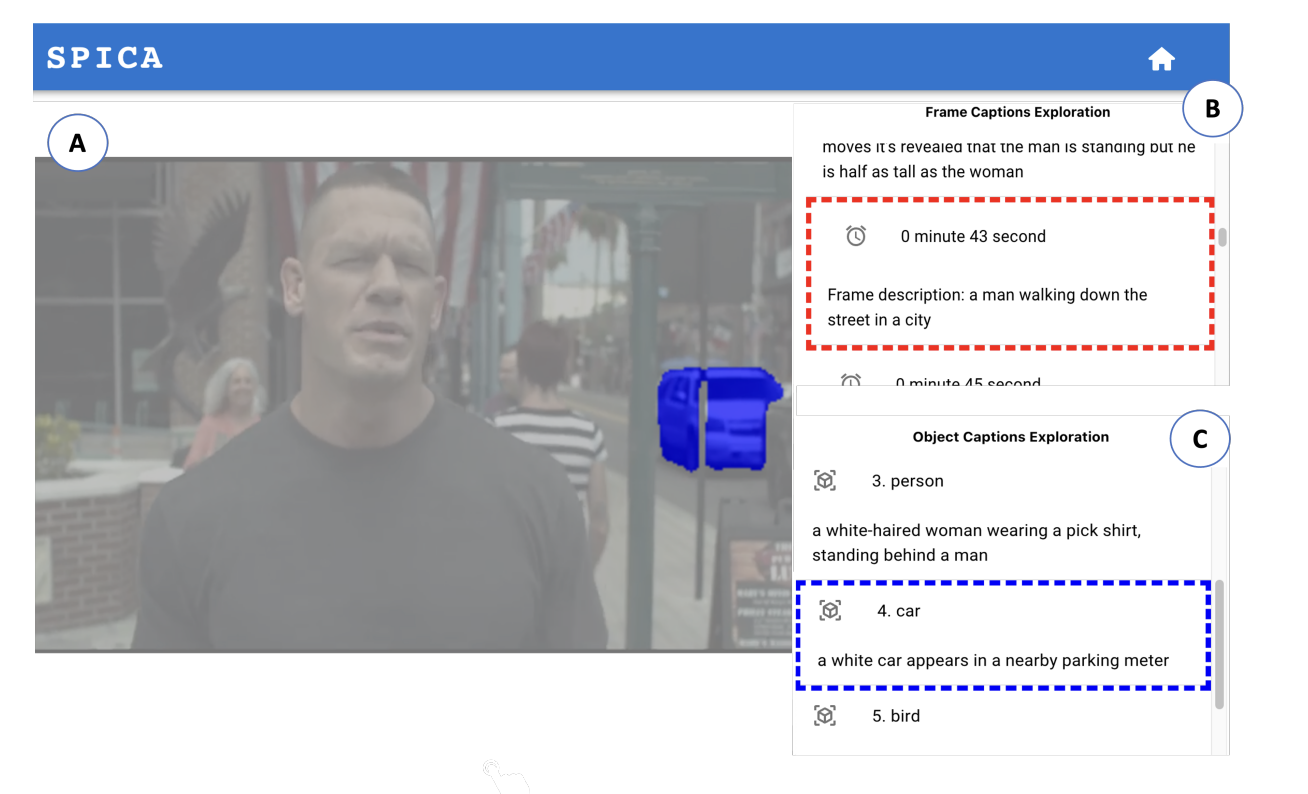

Interactive audio descriptions for blind and low-vision viewers.

Read the paper

Human-AI co-creation of spatial audio effects for video.

Read the paper

Peer-reviewed papers from my research.

VL/HCC 2025

VL/HCC 2024

TiiS (IUI 2023 Special Issue)

IUI 2023