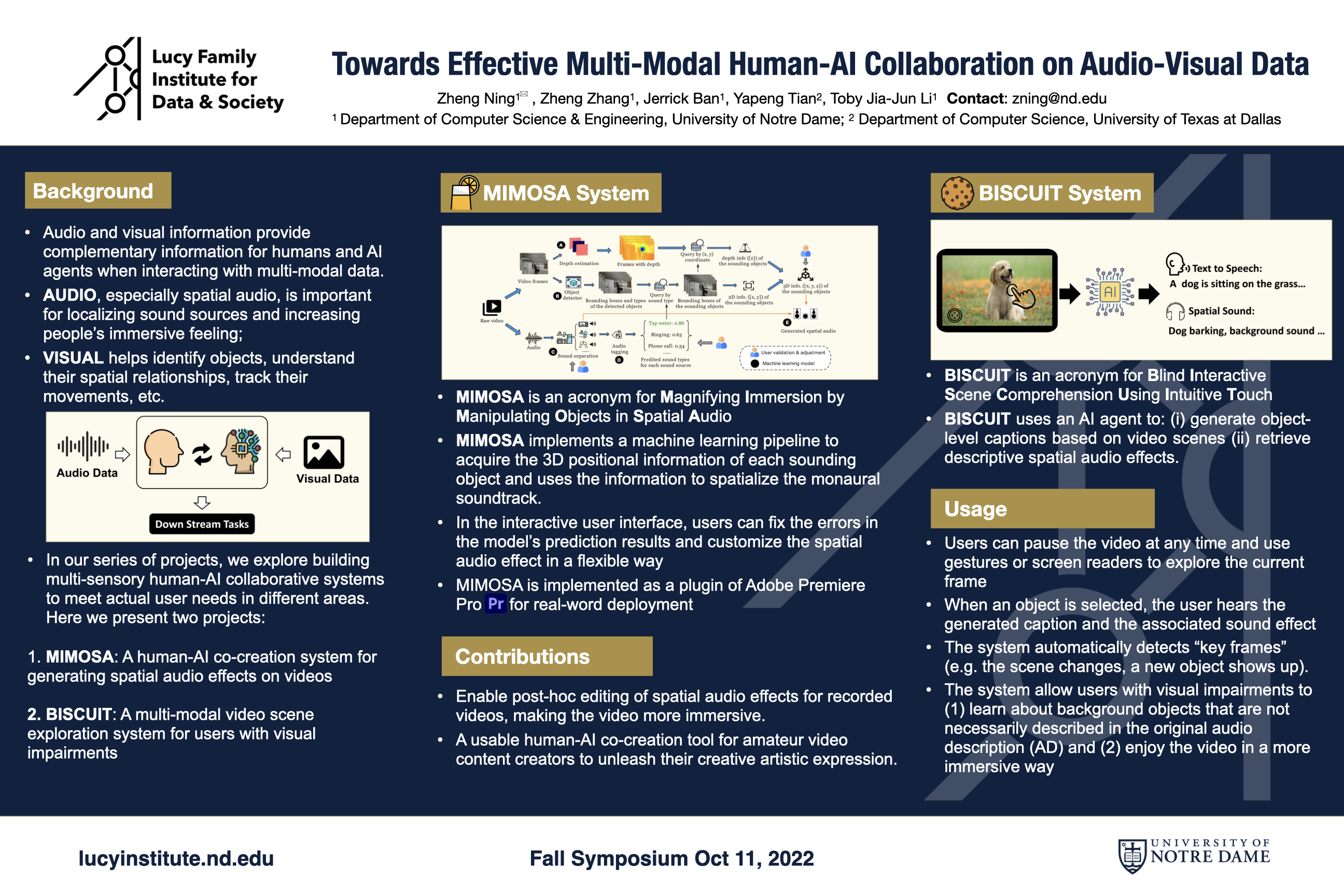

Topic: Towards Effective Multi-Modal Human-AI Collaboration on Audio-Visual Data

Abstract: In our series of projects, we explore building multi-sensory human-AI collaborative systems to meet actual user needs in different areas. Here we present two projects: MIMOSA, a human-AI co-creation system for generating spatial audio effects on videos, and BISCUIT, a multi-modal video scene exploration system for users with visual impairments.

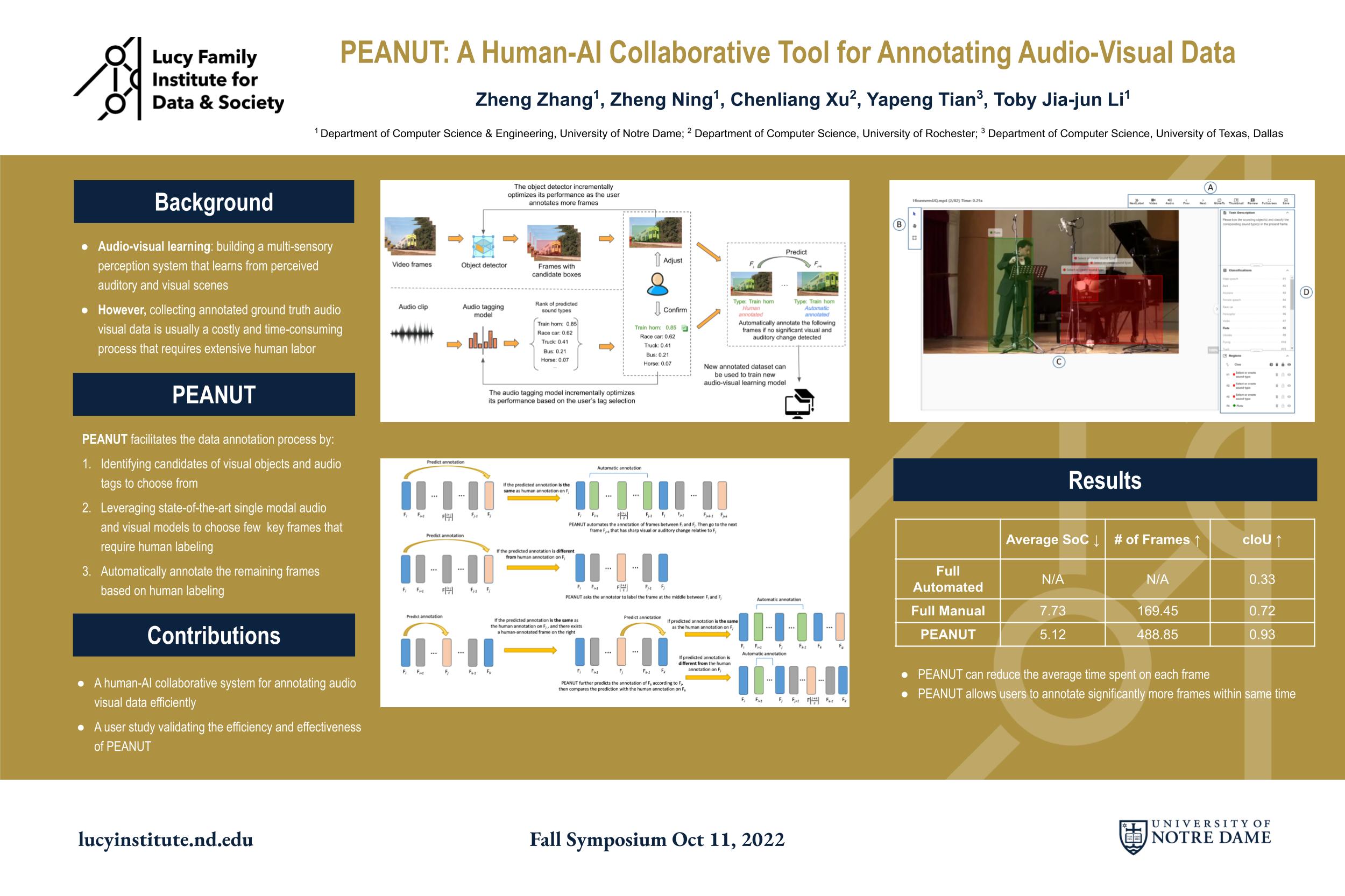

Topic: PEANUT: A Human-AI Collaborative Tool for Annotating Audio-Visual Data

Abstract: Annotating audio-visual datasets is laborious, expensive, and time-consuming. To address this challenge, we designed and developed PEANUT, an efficient audio-visual annotation tool that accelerates the annotation process while maintaining high annotation accuracy.

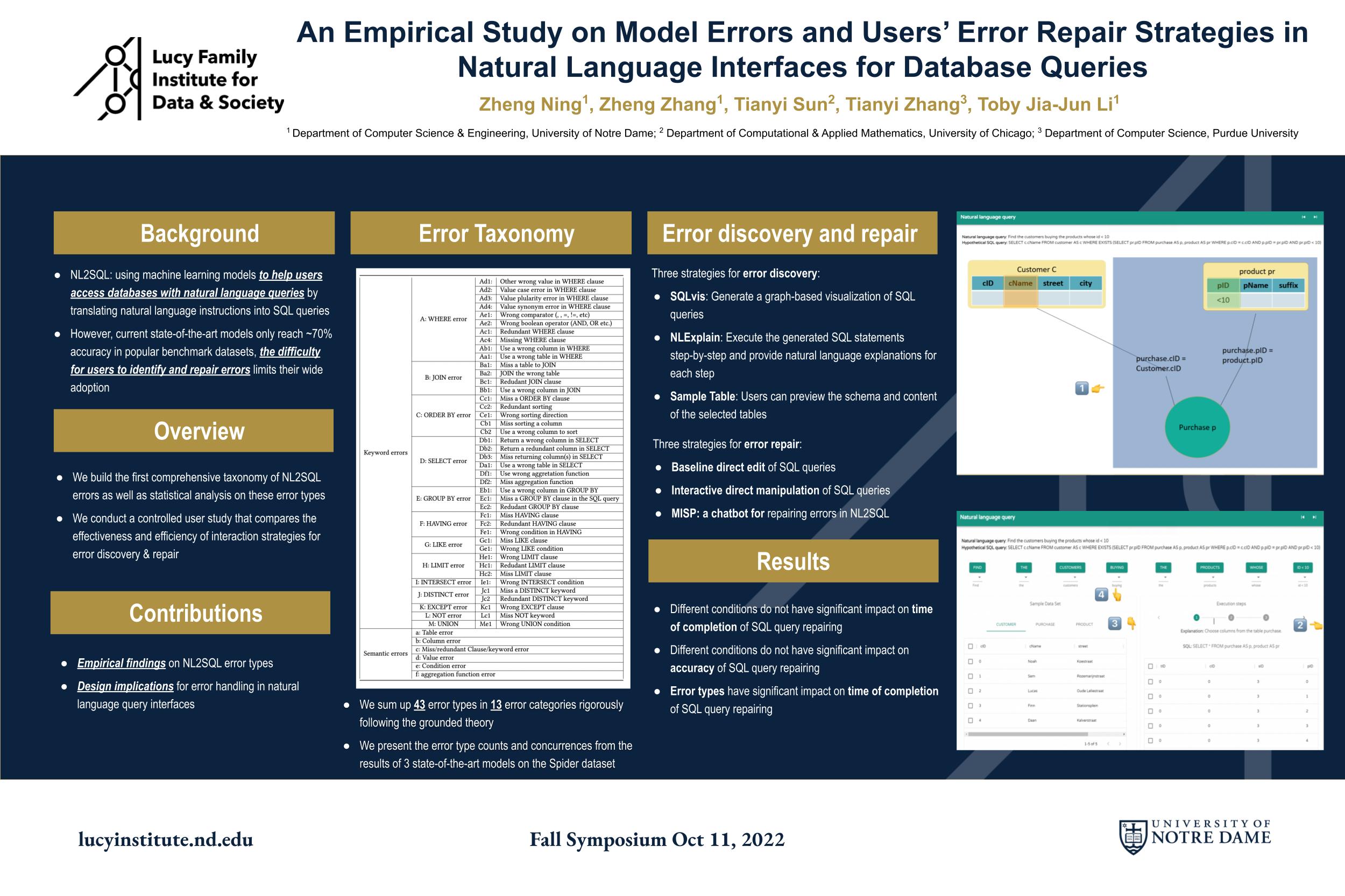

Topic: An Empirical Study on Model Errors and Users’ Error Repair Strategies in Natural Language Interfaces for Database Queries

Abstract: Recent advances in machine learning (ML) and natural language processing (NLP) have led to significant improvements in natural language interfaces for structured databases (NL2SQL). Despite these strides, the overall accuracy of NL2SQL models is still far from perfect. In this study, we analyzed the errors made by recent state-of-the-art (SOTA) NL2SQL models and investigated the effectiveness of three interactive error-handling mechanisms.