Hi! 👋👋 I am a fourth-year Ph.D. student in the Department of Computer Science and Engineering (CSE) at the University of Notre Dame. My advisor is Prof. Toby Jia-Jun Li. Before joining Notre Dame, I received dual Bachelor's degrees in Electrical and Electronic Engineering (EEE) from the University of Electronic Science and Technology of China (UESTC) and the University of Glasgow (graduating with First-Class Honours). I have also worked at Adobe and Microsoft as a research scientist intern.

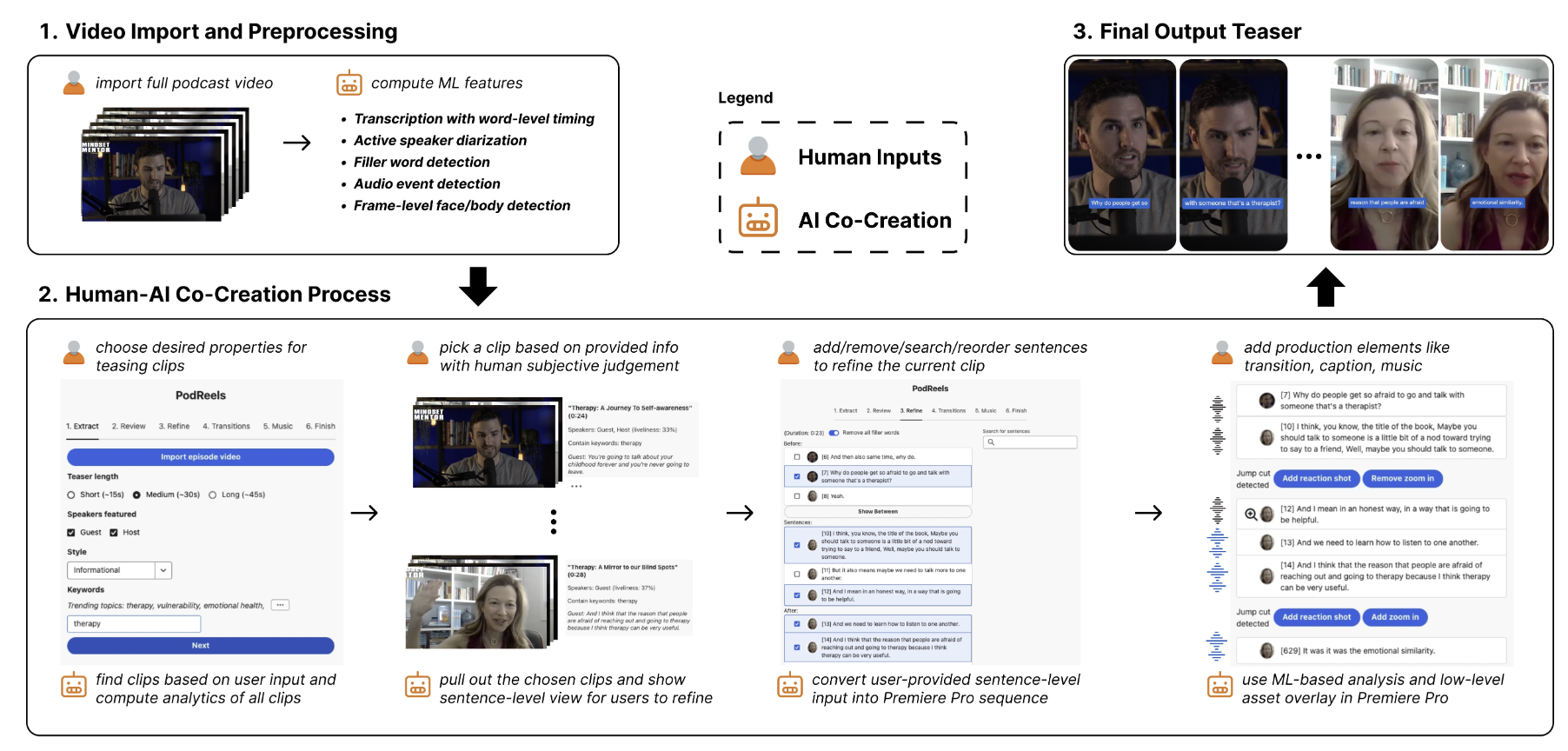

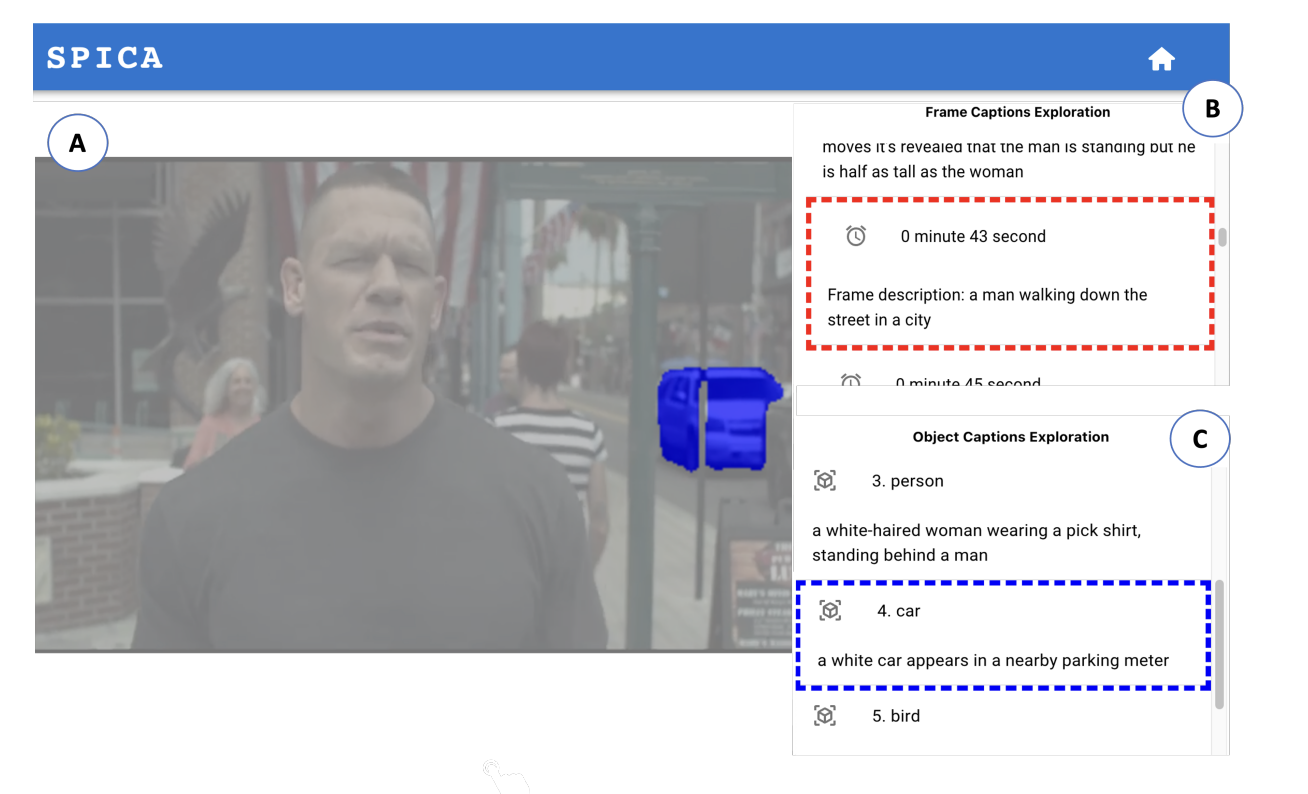

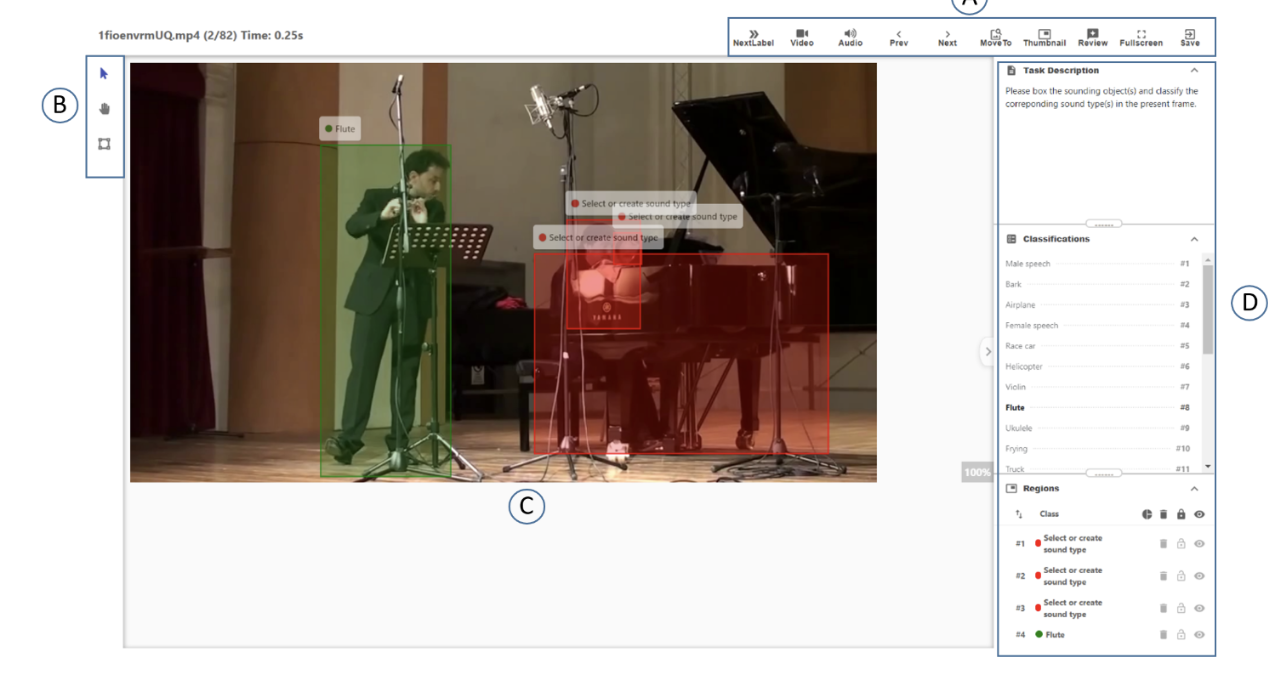

My research focuses on human-AI interaction. I build interactive systems with multimodal AI models to help users engage with content across different modalities (e.g., visual, audio, and text). I have developed tools for video content, including multimodal data annotation (PEANUT@UIST'24), spatial audio effect creation for videos (MIMOSA@C&C'24), and video access for blind or low-vision users that transforms visual information into layered, interactive audio descriptions (SPICA@CHI'24). More recently, my work examines multimodal content representation and transformation, specifically how we can align humans' multimodal perception (e.g., touch, smell, and sight) with the multimodal understanding capabilities of AI agents to streamline user workflows.

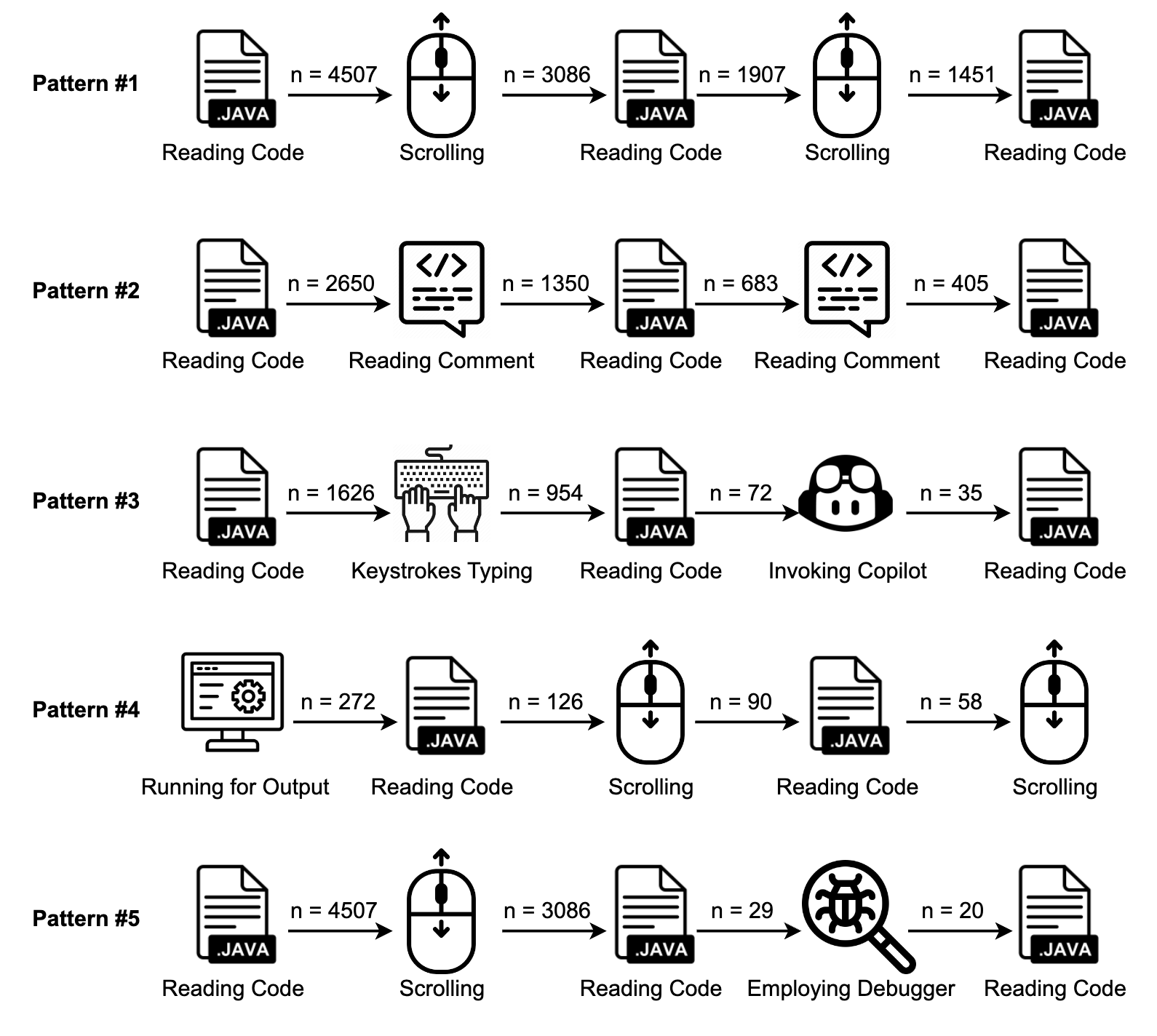

Developer Behaviors in Validating and Repairing LLM-Generated Code Using IDE and Eye Tracking [Paper]

Ningzhi Tang*, Meng Chen*, Zheng Ning, Aakash Bansal, Yu Huang, Collin McMillan, and Toby Li

2024 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC 2024)

SPICA: Interactive Video Content Exploration through Augmented Audio Descriptions for Blind or Low-Vision Viewers [Paper] [Project]

Zheng Ning, Brianna Wimer, Kaiwen Jiang, Keyi Chen, Jerrick Ban, Yapeng Tian, Yuhang Zhao, and Toby Li

In Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI'24)

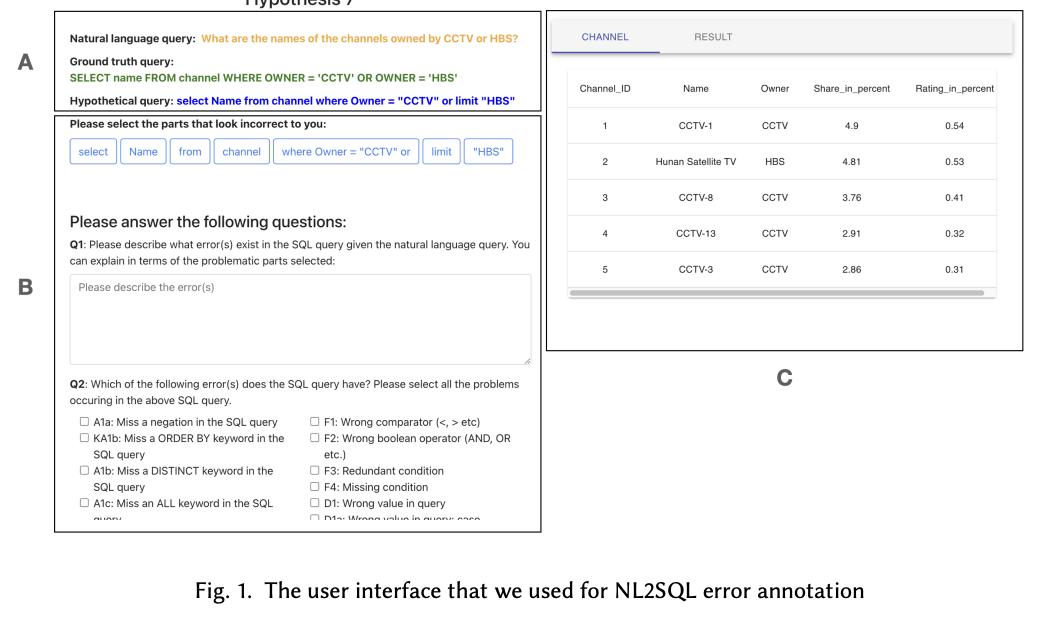

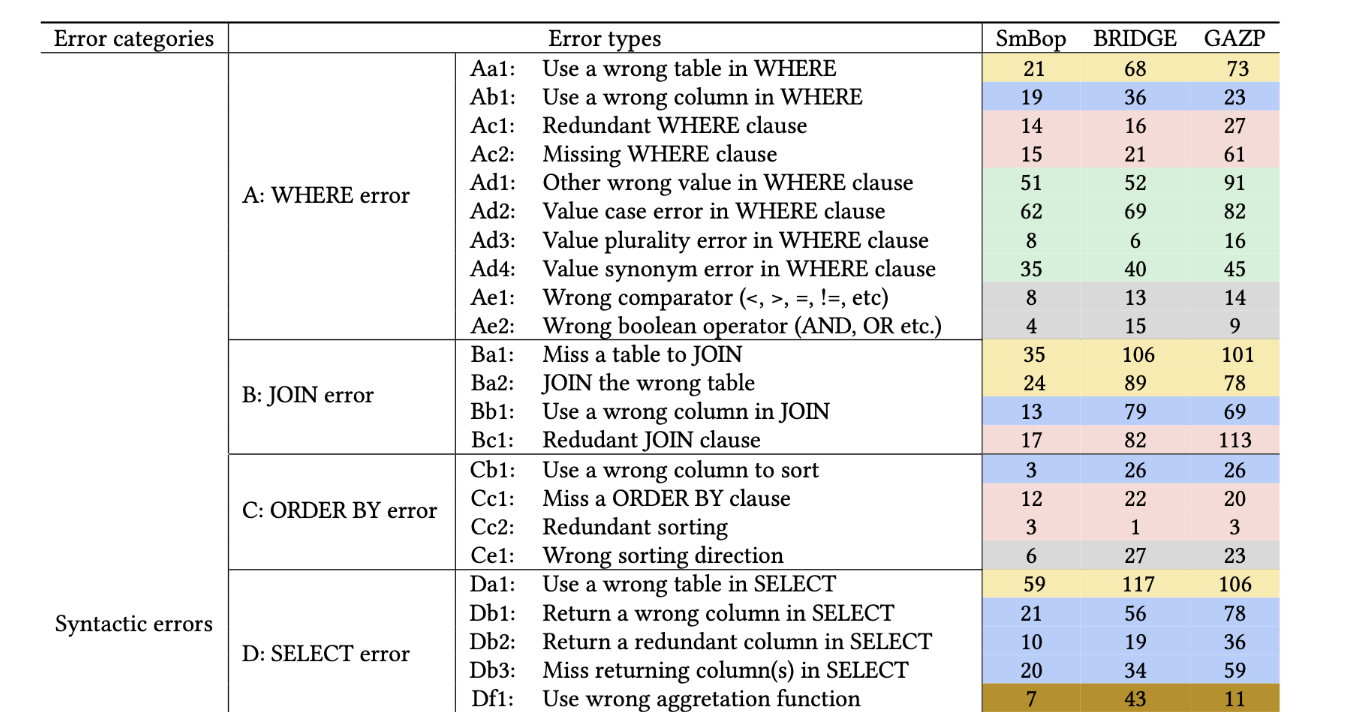

Insights into Natural Language Database Query Errors: From Attention Misalignment to User Handling Strategies [Paper]

Zheng Ning*, Yuan Tian*, Zheng Zhang, Tianyi Zhang, Toby Li

ACM Transactions on Interactive Intelligent Systems (TiiS), IUI 2023 special issue

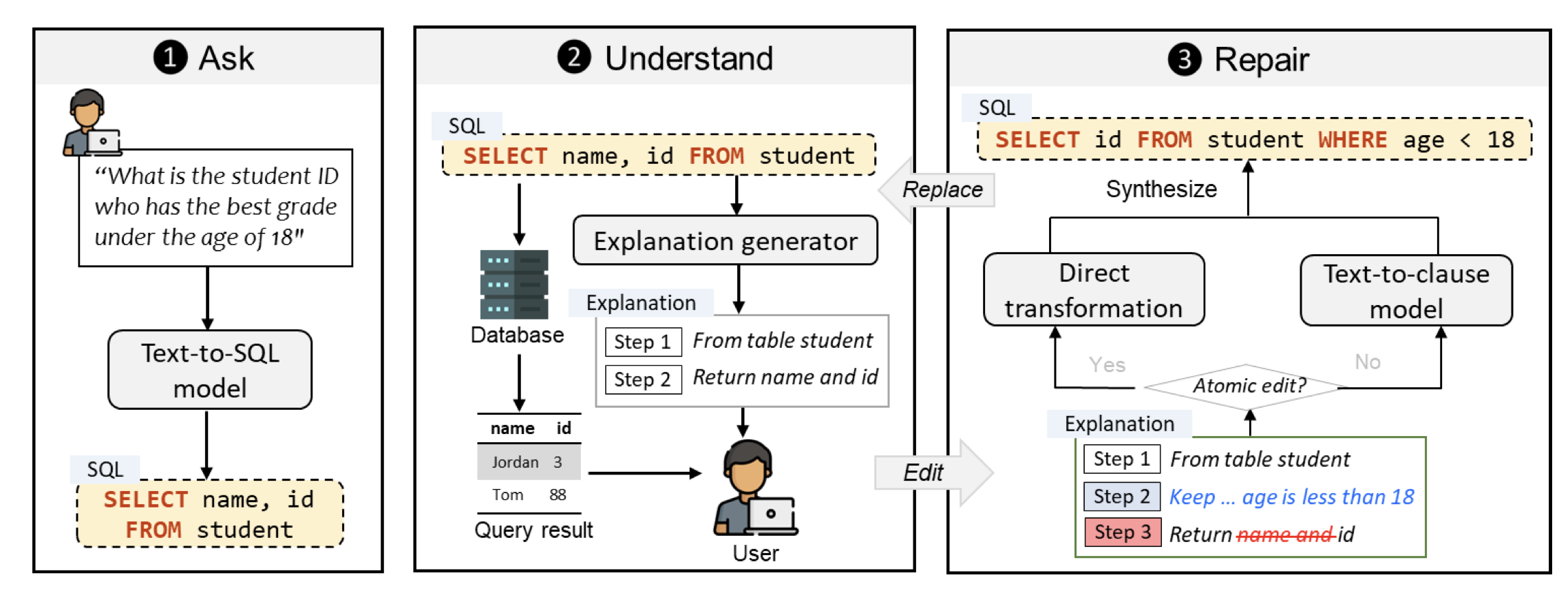

Interactive Text-to-SQL Generation via Editable Step-by-Step Explanations [Paper]

Yuan Tian, Zheng Zhang, Zheng Ning, Toby Jia-Jun Li, Jonathan K. Kummerfeld, Tianyi Zhang

The 2023 Conference on Empirical Methods in Natural Language Processing (EMNLP'23)

An Empirical Study of Model Errors & User Error Discovery and Repair Strategies in Natural Language Database Queries [Paper]

Zheng Ning*, Zheng Zhang*, Tianyi Sun, Yuan Tian, Tianyi Zhang, and Toby Li

The 28th International Conference on Intelligent User Interfaces (IUI'23)